Consider this graphic, showing enrolled patients at each site. It came through in a weekly "Site Newsletter" for a trial I was working on:

I chose this histogram not because it’s particularly bad, but because it’s supremely typical. Don’t get me wrong ... it’s really bad, but the important thing here is that it looks pretty much exactly like every site enrollment histogram in every study I’ve ever worked on.

This is a wasted opportunity. Whether we look at per-site enrollment with internal teams to develop enrollment support plans, or share this data with our sites to inform and motivate them, a good chart is one of the best tools we have. To illustrate this, let’s look at a few examples of better ways to look at the data.

This chart improves on the standard histogram in a few important ways:

- It looks better. This is not a minor point when part of our work is to engage sites and make them feel like they are part of something important. In fact, most of what makes this graph clearer and more appealing is the removal of useless attributes (extraneous whitespace, background colors, and unhelpful labels).

- It adds patient disposition information. Many graphs – like the one at the beginning of this post – are vague about who is being counted. Does "enrolled" include patients currently being screened, or just those randomized? Interpretations will vary from reader to reader. Instead, this chart makes patient status an explicit variable, without adding to the complexity of the presentation. It also provides a bit of information about recent performance, by showing patients who have been consented but not yet fully screened.

- It ranks sites by their total contribution to the study, not by the letters in the investigator’s name. Showing sites how their contribution compares is one of the main reasons we like to share this information with them in the first place.
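The data preparation behind a chart like this can be sketched in a few lines of Python. This is only an illustration, not how the chart above was produced: the status names (`randomized`, `screen_failed`, `in_screening`), the site labels, and the patient records are all made up for the example.

```python
from collections import Counter, defaultdict

# Hypothetical disposition categories, in the order the bar segments would stack.
STATUSES = ["randomized", "screen_failed", "in_screening"]

def rank_sites(patients):
    """Count patients per site and status, then rank sites by total contribution."""
    counts = defaultdict(Counter)
    for site, status in patients:
        counts[site][status] += 1
    rows = [
        {"site": site, "total": sum(c.values()), **{s: c[s] for s in STATUSES}}
        for site, c in counts.items()
    ]
    # Largest contributor first; ties are broken by site label for a stable order.
    return sorted(rows, key=lambda r: (-r["total"], r["site"]))

# Made-up records: one (site, status) pair per consented patient.
patients = [
    ("Site 03", "randomized"), ("Site 03", "randomized"), ("Site 03", "in_screening"),
    ("Site 01", "randomized"), ("Site 01", "screen_failed"),
    ("Site 02", "in_screening"),
]

ranked = rank_sites(patients)
for row in ranked:
    print(row)
```

Each row maps directly onto one stacked bar: the status counts are the segments, and the sort order is the order in which the bars appear.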

There are many other ways in which essentially the same data can be re-sliced or restructured to underscore particular trends or messages. Here are two that I look at frequently, and often find worth sharing.

Then versus Now

This tornado chart is an excellent way of showing site-level enrollment trajectory, with each site’s prior (left) and subsequent (right) contributions separated out. This example spotlights activity over the past month, but for slower trials a larger timescale may be more appropriate. How the data is sorted can also be critical to what the chart communicates: this could have been ranked by total enrollment, but instead it sorts first on most-recent screening, clearly showing who’s picked up, who’s dropped off, and who’s remained constant (both good and bad).

This is especially useful when looking at a major event (e.g., pre/post protocol amendment), or where enrollment is expected to have natural fluctuations (e.g., in seasonal conditions).
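As a rough sketch of the split-and-sort step, assuming we have one screening date per patient: the function name `tornado_rows`, the 30-day default window, and the dates below are all hypothetical choices for illustration.

```python
from datetime import date, timedelta

def tornado_rows(screenings, as_of, window_days=30):
    """Split each site's screenings into prior (left bar) and recent (right bar)."""
    cutoff = as_of - timedelta(days=window_days)
    rows = {}
    for site, screened_on in screenings:
        row = rows.setdefault(site, {"site": site, "prior": 0, "recent": 0})
        row["recent" if screened_on > cutoff else "prior"] += 1
    # Sort on most-recent activity first, then on earlier activity.
    return sorted(rows.values(), key=lambda r: (-r["recent"], -r["prior"], r["site"]))

# Made-up screening dates, one (site, date) pair per patient.
as_of = date(2024, 6, 30)
screenings = [
    ("Site A", date(2024, 3, 2)), ("Site A", date(2024, 4, 11)), ("Site A", date(2024, 4, 20)),
    ("Site B", date(2024, 6, 5)), ("Site B", date(2024, 6, 21)),
    ("Site C", date(2024, 5, 1)), ("Site C", date(2024, 6, 12)),
]

rows = tornado_rows(screenings, as_of)
for row in rows:
    print(row)
```

Note that a site with no screenings at all never appears in this output; in practice you would probably want to seed the rows from the full list of active sites. For a pre/post amendment comparison, the cutoff would be the amendment date rather than a rolling window.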

Net Patient Contribution

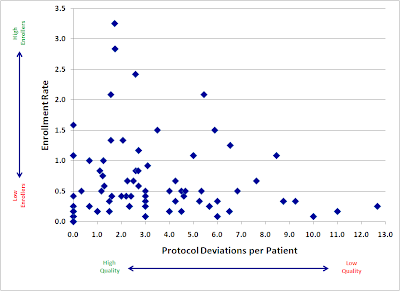

In many trials, site activation occurs in a more or less "rolling" fashion, with many sites not starting until later in the enrollment period. This makes simple enrollment histograms downright misleading, as they fail to differentiate sites by the length of time they’ve actually been able to enroll. Reporting enrollment rates (patients per site per month) is one straightforward way of compensating for this, but it has the unfortunate effect of showing extreme (and, most importantly, non-predictive) variance for sites that have not been enrolling for very long.

As a result, I prefer to measure each site in terms of its net contribution to enrollment, compared to what it was expected to do over the time it was open:

To clarify this, consider an example: A study expects sites to screen 1 patient per month. Both Site A and Site B have failed to screen a single patient so far, but Site A has been active for 6 months, whereas Site B has only been active 1 month.

On an enrollment histogram, both sites would show up as tied at 0. However, Site A’s 0 is a lot more problematic – and predictive of future performance – than Site B’s 0. If I compare them to the benchmark instead, I show how many total screenings each site is below the study’s expectation: Site A is at -6, and Site B is only at -1, a much clearer representation of current performance.

This graphic has the added advantage of showing how the study as a whole is doing. Comparing the total volume of positive to negative bars gives the viewer an immediate visceral sense of whether the study is above or below expectations.
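The arithmetic behind the Site A and Site B example is simple enough to sketch directly. The function name and data layout here are my own for illustration; the only figures taken from the example are the expected rate of 1 screening per month and the two sites' months of activity.

```python
def net_contribution(actual, months_active, expected_per_month=1.0):
    """Screenings achieved minus screenings expected over the time the site was open."""
    return actual - expected_per_month * months_active

# (actual screenings, months active) for the two sites in the example above.
sites = {"Site A": (0, 6), "Site B": (0, 1)}

net = {name: net_contribution(actual, months) for name, (actual, months) in sites.items()}

# Summing the bars gives the study-level position against expectation.
study_net = sum(net.values())

for name, value in net.items():
    print(f"{name}: {value:+.0f}")
print(f"Study: {study_net:+.0f}")
```

A single study-wide expected rate is the simplest possible benchmark. If sites have different enrollment targets, the same calculation works with a per-site `expected_per_month`.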

The above are just 3 examples – there is a lot more that can be done with this data. What is most important is that we first stop and think about what we’re trying to communicate, and then design clear, informative, and attractive graphics to help us do that.