It's been six years since Pfizer boldly announced the launch of its "clinical trial in a box". The REMOTE trial was designed to be entirely online and involved no research sites: study information and consent were delivered via the web, and medications and diaries were shipped directly to patients' homes.

Despite the initial fanfare, within a month REMOTE's planned enrollment on ClinicalTrials.gov was quietly reduced from 600 patients to 283. The smaller trial ended not with a bang but a whimper, having randomized only 18 patients in over a year of recruiting.

Still, the allure of direct-to-patient clinical trials remains strong, due to a confluence of two factors. The first is a frenzy of interest in running "patient centric clinical trials". Sponsors are scrambling to demonstrate that they are doing something, anything, to prove they have shifted to a patient-centered mindset. We cannot seem to agree on what this means (as a great illustration, a recent Forbes article on "How Patients Are Changing Clinical Trials" contained no specific examples of actual trials that had been changed by patients), but running a trial that engages patients directly, wherever they are, seems like it could work.

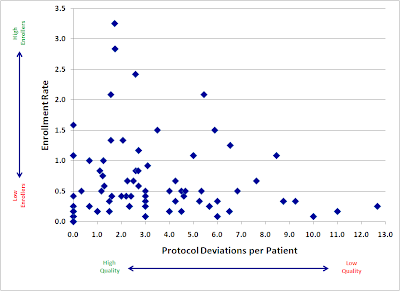

The second, less openly discussed factor driving interest in these DIY trials is sponsors' continued willingness to heap almost all of the blame for slow-moving studies onto their research sites. If it's all the sites' fault, the reasoning goes, then cutting them out of the process should produce trials that are both faster and cheaper. (There are reasons to be skeptical about this, as I have discussed in the past, but the desire to drop all those pesky sites is palpable.)

However, while a few proof-of-concept studies have been done, no other trial really seems to have attempted a full-blown direct-to-patient design. Other pilots have been more successful, but they had fairly lightweight protocols. For all its problems, REMOTE was a seriously ambitious project that attempted to package a complete interventional clinical trial, not merely an observational study.

In this context, it's great to see the published results of the TAPIR trial in vasculitis, which, as far as I can tell, is the first real attempt to run a DIY trial of a magnitude similar to REMOTE.

TAPIR was actually two parallel trials, identical in every respect except for their sites: one trial used a traditional group of 8 sites, while the other was virtual and recruited patients from anywhere in the country. So this was a real-time, head-to-head assessment of site performance.

And the results after a full two years of active enrollment?

- Traditional sites: 49 enrolled

- Virtual ("patient centric"): 10 enrolled

Maybe it’s time to stop blaming the sites? To be fair, they didn’t exactly set the world on fire, and I’m guessing the total cost of activating the 8 sites significantly exceeded the cost of setting up the virtual recruitment and patient logistics. But still, the site-less, “patient centric” approach once again came up astonishingly short.